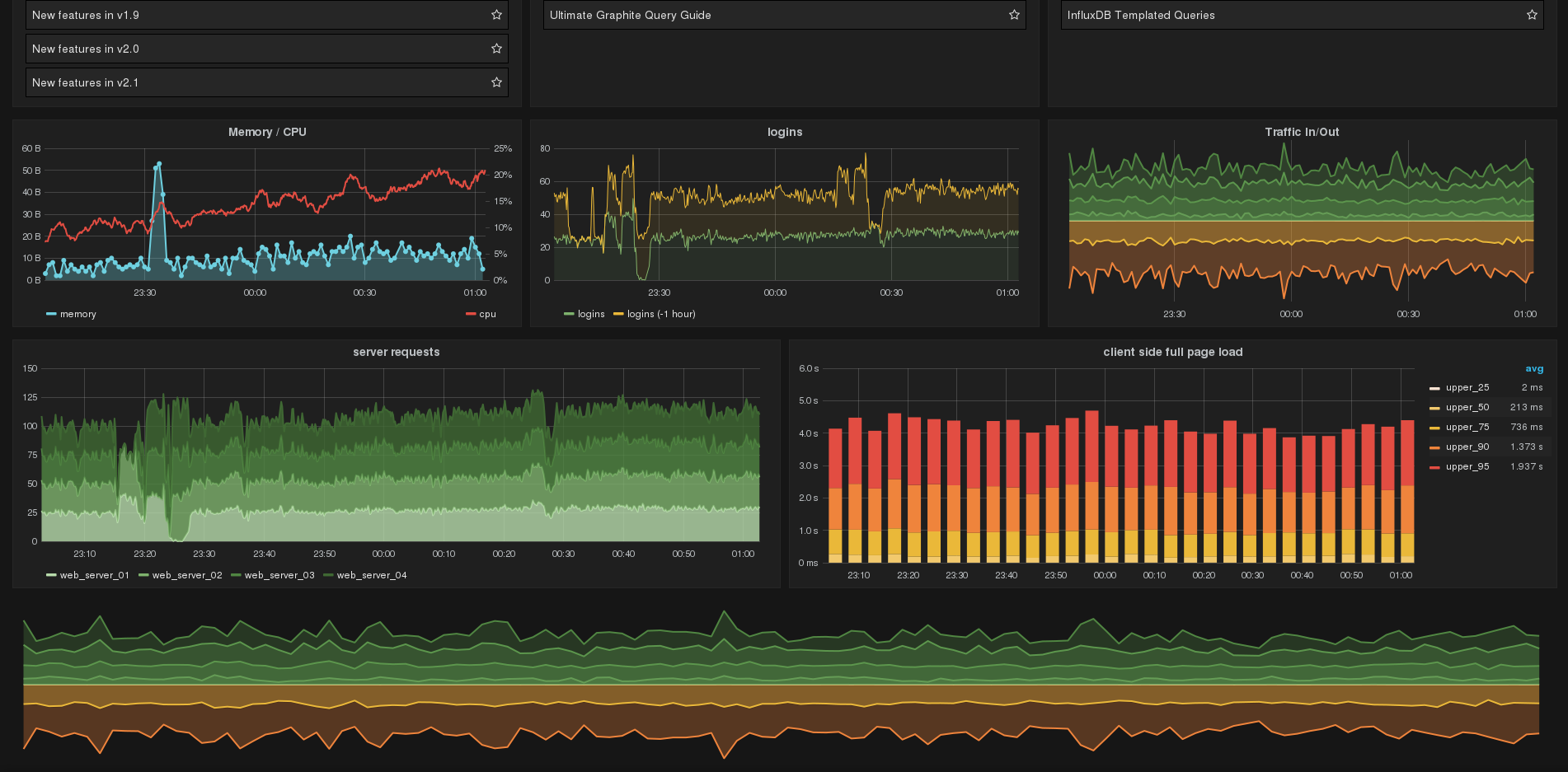

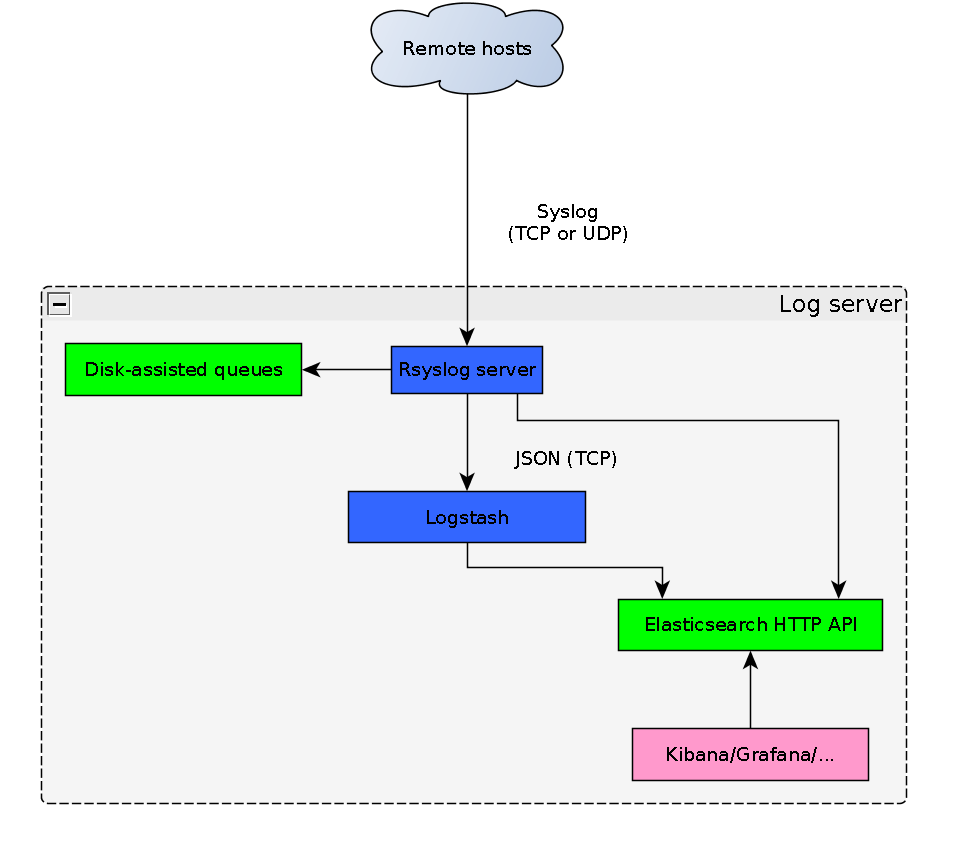

class: center, middle

# Elastic is not the answer to everything

Things you never wanted to learn

Security&Devops-Meetup Vienna, Nov 15th 2017
-------------------------------------------

Volker Fröhlich
---------------

---

# Who am I?

* Volker Fröhlich
* GNU/Linux system administrator
* Geizhals.at
* OpenStreetMap mapper
* Fedora packager

---

# What I wanted to do in 2013

* Introduce a log server of some kind
* Solve a real-world problem
* Original goal was to help with mail logs
* Let's try ELK, I've heard of that before!

# What is in it for you?

* How I am interfacing with ELK
* A critical look at what Elastic is trying to sell you
* Spare you from some of the lessons I had to learn

???

* Not nearly enough time to explain the basics
* Probably not every detail is accurate
* Problems you wouldn't expect, coming from a SQL background

Won't touch the persistence question

Loosely grouped, sometimes showing how one problem leads to another

Who was at Linuxwochen this year? At that time, ELK was already reasonably established and widely tested. I had no idea about the technologies or anything else, really. Elastic wasn't a company back then (?) and Kibana wasn't Node.js yet. Monitoring as such already existed. Zabbix log monitoring was somewhat dull, and the "syslog server" topic had come up before.

---

# What is ELK?

* Elasticsearch, Logstash, Kibana
* "Elastic Stack" (ELK + Beats)
* Collect and process data, store it, visualize it
* Free software, but open-core
* All components support plug-ins of some kind
* Increasingly positioned as the solution to everything, dressed up in fancy words

???

Splunk

Geodata, time series, graphs, for business data, for measurements, for system monitoring, ...

---

# Marketing quotes

* "Elasticsearch Helps **Solve Medical Mysteries**"
* "Put Geo Data **on Any Map**"
* "Bring Everyone in on **the Goodness**"
* "Kibana lets you ... understand **the impact rain might have** on your quarterly numbers."
* "It scales horizontally to handle **kajillions of events per second**, while automatically managing how indices and queries are distributed across the cluster for **oh-so smooth** operations."
* "Elasticsearch runs the way you’d expect it to. In fact, the **only surprise** should be **how well it all works.**"

---

# What are logs anyway?

* Log != File
* Contain meta-information about the actual tasks
* Not meant for regular human consumption
* Timestamp, origin, context, message
* Form and content are up to the developer
* Operational messages
* Performance data
* Error messages, warnings
* Debugging information

---

# Postfix

```
C690912483F1: to=<root@geizhals.at>, relay=127.0.0.1[127.0.0.1]:10024, delay=18, delays=0.05/0.03/0/18, dsn=2.0.0, status=sent (250 2.0.0 Ok, id=26552-28, from MTA([127.0.0.1]:10025): 250 2.0.0 Ok: queued as 3155B1248447)
```

# iptables

```
IN=ra0 OUT= MAC=00:17:9a:0a:f6:44:00:08:5c:00:00:01:08:00 SRC=200.142.84.36 DST=192.168.1.2 LEN=60 TOS=0x00 PREC=0x00 TTL=51 ID=18374 DF PROTO=TCP SPT=46040 DPT=22 WINDOW=5840 RES=0x00 SYN URGP=0
```

---

# NCSA logs

```
10.0.0.137 - - [06/Nov/2015:01:01:07 +0100] "GET / HTTP/1.1" 200 33771 "http://www.geizhals.at/" "Mozilla/5.0 (X11; Linux x86_64) iPad 95""
```

# Cisco ASA, Apache 2.4 errors

```
%ASA-1-105006: (Primary) Link status Up on interface interface_name.
AH00940: %s: disabled connection for (%s)"
```

---

# Jira backtrace

```
2015-11-07 01:11:00,026 Sending mailitem To=’user@example.com’ Subject=’Some subject’ From=’null’ FromName=’null’ Cc=’null’ Bcc=’null’ ReplyTo=’null’ InReplyTo=’null’ MimeType=’text/plain’ Encoding=’UTF-8’ Multipart=’null’ MessageId=’null’
ERROR anonymous Mail Queue Service [atlassian.mail.queue.MailQueueImpl] Error occurred in sending e-mail: To=’user@example.com’ Subject=’Some subject’ From=’null’ FromName=’null’ Cc=’null’ Bcc=’null’ ReplyTo=’null’ InReplyTo=’null’ MimeType=’text/plain’ Encoding=’UTF-8’ Multipart=’null’ MessageId=’null’
com.atlassian.mail.MailException: javax.mail.SendFailedException: Invalid Addresses;
  nested exception is:
    com.sun.mail.smtp.SMTPAddressFailedException: 550 5.1.6 <user@example.com>: Recipient address rejected: User has moved to somewhere else. For more information call Example at +43 123123 or e-mail info@example.com
    at com.atlassian.mail.server.impl.SMTPMailServerImpl.sendWithMessageId(SMTPMailServerImp
    at com.atlassian.mail.queue.SingleMailQueueItem.send(SingleMailQueueItem.java:44)
...
```

---

# How to ship the logs?

* Beats? Fluentd? Kafka? **Syslog!**
* Syslog != /var/log/syslog
* Syslog is ubiquitous, flexible and well known
* Protocol -- RFC 3164, RFC 5424
* Modern syslogd implementations like rsyslog
* Low resource consumption, robust
* Many limitations are irrelevant or can be worked around
* Structured logging? cee!

```
Nov 17 12:37:31 myhost syslogtag: @cee:{"key1": "value1", ...}
```

???

Why would I care about dedicated agents, plus Redis for buffering on top? Oh, ELK can do syslog? Fine! And CEE works too.

---

# Logstash (LS)

* JRuby data processing pipeline
* Ingests data from various sources
* Transforms it
* Puts it somewhere
* "Input, filter, output"
* E.g.: Receive syslog, parse messages, store in ES
* Grok is one of the highlights

```
%{IPORHOST:host} %{POSINT:duration} seconds
```

* https://www.logstashbook.com

---

# Elasticsearch (ES)

* Distributed Java NoSQL database
* Indices, shards
* Based on the full text search engine Lucene
* Indices, documents, fields
* Inverted index
* Read "The Definitive Guide"!
* Adds RESTful APIs, aggregations, a DSL and clustering
* Everything is done through these APIs

---

# Kibana

* NodeJS application
* Visualize and explore data stored in ES (only)

???

What happened to the markers in (Kibana) 3?

---

# Grafana

* Not an Elastic product
* Go application with a JavaScript front end, not open-core
* Supports various datasources
* Has an API and a plug-in system
* Has some authorization support

.image-50[]

???

TODO image

---

.image-70[]

---

# Which problems are we facing?

* Consequences of Lucene under the hood
* Operating and maintaining the stack

---

# ES -- Let's store ("index") a document!

```
curl -XPOST localhost:9200/<index>/<type>/?pretty -d '<JSON>'

POST /logstash-2017.11.05/logs/
{
  "host": "myhost",
  "severity": "warning",
  "message": "something went wrong"
}
```

* Index is created automatically
* Data types are determined somehow
* What if I try to index with "severity": 3 now?

???

Commas, comments; with an ID, use PUT

TODO: no shell

---

# ES -- What does the document look like?

```
curl -XGET localhost:9200/logstash-2017.11.05/logs/AVFgSgVHUP18jI2wRx0w?pretty

{
  "_index" : "logstash-2017.11.05",
  "_type" : "logs",
  "_id" : "AVFgSgVHUP18jI2wRx0w",
  "_version" : 1,
  "found" : true,
  "_source" : {
    "host": "myhost",
    "severity": "warning",
    "message": "something went wrong"
  }
}
```

* What happened to our fields?
* Mappings and analyzers

???

* Can I get that as a table?

TODO: Can _source be _all? No, because _all is tokenized, right?
* jq or another JSON parser
* Analyzer
* Template (dynamic, static) ignore_above: 256, "index": "not_analyzed"

Botched permissions in the Kibana directory

* For an update, the whole document may have to go to the client

And another type, so the conflict can be shown later

Commas are tricky in JSON

Show a long query that does little

The mapping should not grow too large. Mappings are per index. With index series, this can cause trouble.

---

```
curl -XGET localhost:9200/logstash-2017.11.05/_mapping?pretty
...
      "dynamic_templates" : [ {
        "string_fields" : {
          "mapping" : {
            "fielddata" : {
              "format" : "disabled"
            },
            "index" : "analyzed",
            "omit_norms" : true,
            "type" : "string",
            "fields" : {
              "raw" : {
                "ignore_above" : 256,
                "index" : "not_analyzed",
                "type" : "string",
                "doc_values" : true
              }
            }
          },
          "match" : "*",
          "match_mapping_type" : "string"
...
```

---

# ... and so on

```
"logs" : {
  "_all" : {
    "enabled" : true
  },
  ...
  "host" : {
    "type" : "string",
    "fields" : {
      "raw" : {
        "type" : "string",
        "index" : "not_analyzed",
        "ignore_above" : 256
      }
    }
  },
  ...
```

???

TODO: What does LS do today?

---

```
"With sensible defaults and no up-front schema definition, Elasticsearch makes it easy to start simple and fine-tune as you grow."
```

# What if I made a mistake?

* Mapping of the first occurrence of a field
* Can I change the data type of a field? **No!**
* Can I cast a type? **Maybe!**
* Can I rename a field? **No!**
* Can I add a sub-field? **Usually not!**
* Can I add a new field? **Yes**
* Can I delete the offending documents and re-index them? **No longer!**

???

* ... or a pre-defined mapping (supports wildcards)

Sabotaging tomorrow's index, which I had already prepared and filled with garbage, possibly via an alias so the template doesn't apply on creation. At least LS then drops everything. I'm not sure what the bulk API would do.

TODO: join

---

# What if I made a mistake? (Cont.)
* You must re-index the affected indices
* Can I reindex without the _source field? **No!**
* Consuming time and space
* Is a mess with data pouring in
* Is not guaranteed to finish
* You can't rename the index, but you can add an alias
* Try not to run out of disk!

???

TODO: scroll

---

# Document types

* Document types are a lie (deprecated in 6.0)

> Initially, we spoke about an “index” being similar to a “database” in an (sic!) SQL database, and a “type” being equivalent to a “table”.
> This was a bad analogy that led to incorrect assumptions.

* They only help to distinguish between different kinds of documents
* The mapping must not conflict though

---

# Data types

* Elasticsearch 5.3 can deal with IPv6 (but not v4/v6)
* Only 13 years after PostgreSQL (7.4)!
* But the operators in PG look more convincing
* If you store IP addresses as such, you potentially can't use wildcards
* Forces you to store yet another variant and to know that in advance

---

# Lucene queries

## {"name": "Hansi", "age": 12}

> Hansi -- **Match**, due to _all

> name:Hansi and age:12 -- **Match**, but too wide

> name:hansi -- **Match** -- Case doesn't matter, at least for analyzed fields

> nmae:Hansi AND age:12 -- **No match**, field name typo

> age:[12 TO 22} -- **Match**, slightly unconventional syntax

---

# Lucene queries continued

## {"hostname": "web-app-01", "client_ip": "103.241.206.252"} (analyzed)

> hostname:web-app -- **Match**, but will also return "web-front-01"

> client_ip:10.\* -- **Match**

???

TODO: Reason for the latter?

---

# Regular expression support

* Yes, somewhat
* Regex Query DSL
* Always anchored both ways
* Only works usefully for non-analyzed fields (same for terms queries)
* Length limit
* Case sensitivity and Kibana

---

# Regex matching

## {"name": "Hansi"}

```
name:/^Han/ -- No!
name:/^Hansi$/ -- Forget everything you know about PCRE!
name:/Han/ -- Only anchored in the front
name:/Han.*/ -- "Hooray"
name:/Ha[NR].*/ -- Expression cast to lower case in Kibana
```

## {"title": "Story of my life", "content": "birth, being a toddler, ..., death"}

```
content:/birth.*death/ -- Sorry, fell asleep listening at some point!
```

---

# Other search issues

* Implicit defaults, like "size"

```
SELECT name, COUNT(*) FROM staff WHERE name ~ '^Han' GROUP BY 1;
 Hannes | 2
 Hans   | 3
 Hanno  | 1
```

* Terms aggregations don't work like "group by" at all (order, empty buckets, defaults)

```
 Emil   | 0 <-- ?
```

* JSON Query DSL is hard to get right and the error messages are rarely helpful

???

min_doc_count

---

# Access control

* There is none
* At least you _can_ now _limit_ deletes

```
curl -XDELETE "localhost:9200/*"
```

* Put it on a well-protected network
* Put it behind an HTTP reverse proxy
* This still provides no fine-grained control
* Use aliases, but they are a mess with index series
* Buy X-Pack

---

# Documentation quality and getting help

* \#elasticsearch IRC on Freenode has 560 people sitting in silence
* Documentation doesn't go into a lot of detail (snapshots, scripts)
* https://github.com/elastic/elasticsearch/issues/7464 -- Where was that documented?

```
< Nodulaire> This doc is a shame: https://www.elastic.co/guide/en/logstash/current/logstash-settings-file.html. Many options are missing
< Nodulaire> The x-pack options should be on the general documentation. Freemium bullshit
```

???

---

# Constancy

* Let's change the REST API in incompatible ways
* Oh, dots in field names are OK
* You can't have dots in field names
* On second thoughts, dots in field names are OK
* Oh, here, look: _The_ new script language

> [...] two vulnerabilities were due to dynamic scripting, and **we wrote a whole new scripting language** to solve that problem!"

???
Groovy, mvel, mustache, Painless

Tribe node

---

# Operating Elastic Stack

* A huge bundle
* Short maintenance cycle
* "Elastic stack" version policy

```
< gorkhaan> Filebeat 5.3.1 stopped shipping logs it seems, whereas 5.3.0 still works.
Logstash: Updated logstash-input-beats 3.1.12 to 3.1.15
For filebeat-5.3.1: ERR Failed to publish events caused by: write tcp 10.1.20.191:43942->10.96.20.143:5044: write: connection reset by peer
ok all works. had to upgrade logstash and all.
```

* Plug-in version policy and management
* If the plug-ins fail to update, ...

---

# And the rant continues!

* Circuit-Breaker, Task API
* 32 GB limit
* Broken indices are fun

---

# And this is for Logstash!

* Crashes can be very hard to understand in LS
* Plug-ins can kill: output-mail, filter-ruby
* Config testing is not worth much
* No useful configuration splitting
* No introspection
* Ugly logging, but you can DoS yourself
* UDP listener unacceptable
* LS can hang if ES goes away
* ES and Beats increasingly cannibalize LS

???

Leftovers

* Kibana index name globbing: logstash-* also matches logstash-testing-*
* Changing Kibana? Compile it!
* Works better in Chromium than in Firefox

```
curl -XGET localhost:9200/my_company/_mapping?pretty
{
  "my_company" : {
    "mappings" : {
      "staff" : {
        "properties" : {
          "age" : { "type" : "long" },
          "name" : { "type" : "string" }
        }
      },
      "customer" : {
        "properties" : {
          "name" : { "type" : "string" },
          "place" : { "type" : "string" }
        }
      }
    }
  }
}
```

* **_source:** The original JSON representing the body of the document; can be disabled
* **_all:** A catch-all field that indexes the values of all other fields; can be disabled

Adding up 2 numbers is tricky

LS offers little insight, tends to clog up and has no persistent queue

The LS config test is worthless

Don't index anything you don't also keep in its original form somewhere else

Aggregations are hard in LS

Search API:

```
POST /index_name/document_type/_search
{DSL JSON}
```

Task API:

```
POST /_tasks/_cancel?nodes=nodeId1,nodeId2&actions=*reindex
```

* There and back again, and one solution for everything

---

# Conclusions

* ES gets the job done, most of the time
* Understanding ES is not trivial
* Changing anything on a production system is a challenge
* Upgrading is not trivial
* LS gets the job done, most of the time
* Kibana is pretty much necessary for tabular views, but maybe try Grafana!
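---

# Appendix: Emitting a CEE message

The `@cee:` line on the "How to ship the logs?" slide is just a syslog message whose text is the `@cee:` cookie followed by a JSON object. A minimal sketch, assuming Python's standard `logging.handlers.SysLogHandler` as the sender and a local syslogd on `/dev/log`; both helper names (`cee_message`, `syslog_logger`) are hypothetical and not part of the original setup.

```python
import json
import logging
import logging.handlers


def cee_message(fields):
    """Return a CEE-style syslog payload: the '@cee:' cookie plus a JSON object."""
    # sort_keys gives a stable field order, which makes the output easy to compare
    return "@cee:" + json.dumps(fields, sort_keys=True)


def syslog_logger(tag, address="/dev/log"):
    """Hypothetical helper: a logger that sends 'tag: message' to the local syslogd."""
    logger = logging.getLogger(tag)
    handler = logging.handlers.SysLogHandler(address=address)
    # The syslog tag goes in front of the message; the receiving syslogd
    # usually fills in the timestamp and hostname itself.
    handler.setFormatter(logging.Formatter(tag + ": %(message)s"))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    print(cee_message({"key1": "value1", "severity": "warning"}))
    # Prints: @cee:{"key1": "value1", "severity": "warning"}
```

On a host with rsyslog, `syslog_logger("myapp").info(cee_message({...}))` would ship such a line; on the receiving side, rsyslog's mmjsonparse module is one way to turn the payload back into fields.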
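---

# Appendix: What a grok expression boils down to

The grok line on the Logstash slide is shorthand for a regular expression with named captures. A rough sketch of the expansion, with deliberately simplified stand-ins for `IPORHOST` and `POSINT`. The real grok definitions are longer; `IPORHOST` also covers IPv6, for example. `grok_to_regex` is a hypothetical helper, not a Logstash API.

```python
import re

# Simplified stand-ins for two grok patterns. The real IPORHOST definition
# covers IPv4, IPv6 and hostnames and is much longer than this.
GROK_PATTERNS = {
    "IPORHOST": r"[0-9A-Za-z][0-9A-Za-z.:-]*",
    "POSINT": r"\b(?:[1-9][0-9]*)\b",
}

# Matches a reference like %{POSINT:duration}
GROK_REF = re.compile(r"%\{(\w+):(\w+)\}")


def grok_to_regex(expression):
    """Expand %{PATTERN:field} references into named groups; escape the rest."""
    # split() with two capture groups yields: literal, pattern, field, literal, ...
    parts = GROK_REF.split(expression)
    regex = []
    for i in range(0, len(parts), 3):
        regex.append(re.escape(parts[i]))  # literal text between references
        if i + 2 < len(parts):
            pattern, field = parts[i + 1], parts[i + 2]
            regex.append("(?P<%s>%s)" % (field, GROK_PATTERNS[pattern]))
    return re.compile("".join(regex))


if __name__ == "__main__":
    line_pattern = grok_to_regex("%{IPORHOST:host} %{POSINT:duration} seconds")
    match = line_pattern.fullmatch("web01.example.com 42 seconds")
    print(match.groupdict())  # {'host': 'web01.example.com', 'duration': '42'}
```

As in Logstash, the captures are strings; grok only converts them if you add a type suffix such as `%{POSINT:duration:int}`.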
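---

# Appendix: Why hostname:web-app also finds web-front-01

An analyzed field is stored as individual terms in an inverted index, and the query text is analyzed the same way. A toy model, not Lucene: the "analyzer" below just lowercases and splits on anything that isn't an ASCII letter or digit (Lucene's standard analyzer follows Unicode word-boundary rules and differs in detail), and the search assumes the query string's default OR operator.

```python
import re
from collections import defaultdict


def analyze(text):
    """Toy analyzer: lowercase, then split on anything that is not a letter or digit."""
    return [term for term in re.split(r"[^0-9a-z]+", text.lower()) if term]


class InvertedIndex:
    """Maps each term to the set of document IDs that contain it."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, doc_id, text):
        for term in analyze(text):
            self.postings[term].add(doc_id)

    def search(self, query):
        """OR semantics: any shared term is enough for a document to match."""
        hits = set()
        for term in analyze(query):
            hits |= self.postings.get(term, set())
        return sorted(hits)


if __name__ == "__main__":
    index = InvertedIndex()
    index.add(1, "web-app-01")
    index.add(2, "web-front-01")
    index.add(3, "db-01")
    print(analyze("web-app-01"))    # ['web', 'app', '01']
    print(index.search("web-app"))  # [1, 2]: "web" alone is enough
```

The same lowercasing is why `name:hansi` matches a stored "Hansi": both sides end up as the term `hansi`.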
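---

# Appendix: Regular expressions match single terms

The regex slides behave as they do because a regexp query is evaluated against each stored term separately, and the pattern has to cover the whole term. Python's `re.fullmatch` approximates that implicit anchoring; the Lucene dialect differs in other ways (the Elasticsearch regular expression syntax documentation states that `^` and `$` anchors are not supported). The helpers `terms` and `regexp_query` are hypothetical, and `terms` is a toy analyzer.

```python
import re


def terms(text):
    """Toy analyzer: lowercase words and numbers, as an analyzed field might store them."""
    return re.findall(r"[0-9a-z]+", text.lower())


def regexp_query(pattern, field_terms):
    """Lucene-style regexp: the pattern has to match one whole term."""
    # re.fullmatch mimics the implicit anchoring at both ends of the term
    return any(re.fullmatch(pattern, term) for term in field_terms)


if __name__ == "__main__":
    name = terms("Hansi")  # ['hansi']
    content = terms("birth, being a toddler, ..., death")
    print(regexp_query("Han", name))              # False: must match the whole term
    print(regexp_query("han.*", name))            # True
    print(regexp_query("Han.*", name))            # False: the stored term is lowercase
    print(regexp_query("birth.*death", content))  # False: no single term spans both
```

For a non-analyzed field, the whole value is a single term with its case preserved, so `regexp_query("Han.*", ["Hansi"])` is true there; that is why regexes are mostly useful on non-analyzed fields.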
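---

# Appendix: Re-indexing and switching an alias

The "What if I made a mistake?" advice boils down to two REST calls. `POST /_reindex` copies documents into a new index (it reads them from `_source`, which is why re-indexing without `_source` is impossible). `POST /_aliases` then points a stable name at the result. A sketch that only builds the request bodies, assuming the corrected mapping or template is already in place for the destination index. The `-v2` index and the `logs-current` alias are hypothetical names.

```python
import json


def reindex_body(source_index, dest_index):
    """Body for POST /_reindex: copy every document's _source into dest_index."""
    return {"source": {"index": source_index}, "dest": {"index": dest_index}}


def alias_switch_body(alias, old_index, new_index):
    """Body for POST /_aliases: both actions are applied atomically."""
    return {
        "actions": [
            {"remove": {"index": old_index, "alias": alias}},
            {"add": {"index": new_index, "alias": alias}},
        ]
    }


if __name__ == "__main__":
    # Hypothetical names: the "-v2" index and the "logs-current" alias are made up.
    print("POST /_reindex")
    print(json.dumps(reindex_body("logstash-2017.11.05", "logstash-2017.11.05-v2"), indent=2))
    print("POST /_aliases")
    print(json.dumps(alias_switch_body("logs-current", "logstash-2017.11.05",
                                       "logstash-2017.11.05-v2"), indent=2))
```

If the alias does not exist yet, only the `add` action is needed. An alias also cannot share its name with an existing index, so reusing the old index name as an alias means deleting the old index first.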